Translating English to Geek (and Back): How AI Can Finally Fix User Acceptance Testing

AI is transforming user acceptance testing from a bottleneck into a breakthrough. Learn how Mesh Digital’s AI-powered approach translates English to Geek and back, empowering business users, accelerating QA, and redefining quality for the enterprise era.

User Acceptance Testing (UAT) is supposed to be the moment of truth, the place where business meets technology, and users confirm that the shiny new feature in front of them actually works in the messy reality of day-to-day operations. In theory, UAT is where rubber meets road. In practice, it’s often where rubber burns out.

Most organizations still treat UAT as a box-checking exercise. Product owners and business stakeholders are handed scripts they don’t understand, forced into testing environments that feel foreign, and asked to sign off on systems that only half make sense. The result? Delays, bottlenecks, missed edge cases, and tense conversations at go-live.

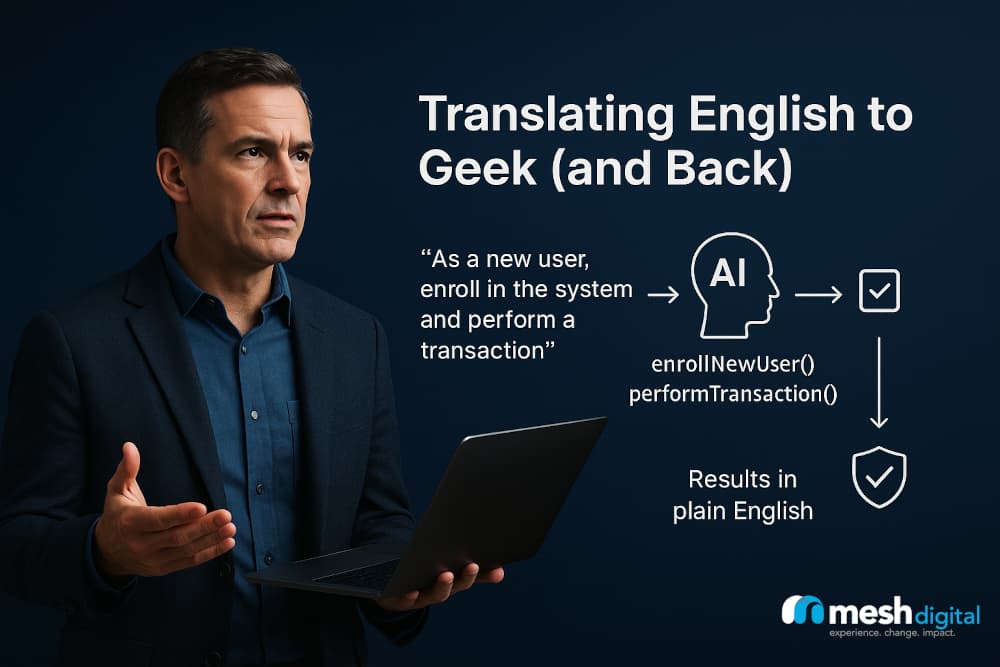

At Mesh Digital, we see this dysfunction as a classic “English vs. Geek” problem. Business users think in stories and outcomes: “I want to log in, complete a transaction, and see a confirmation without glitches.” Engineers think in parameters and functions: loginWithCredentials(), submitTransaction(), verifyConfirmation(). QA lives somewhere in the middle, translating human intent into scripted test steps. The problem is that the translation breaks down, especially under release pressure.

Here’s the provocation: AI can finally close this translation gap. Not by automating UAT away, but by reinventing it. Generative AI, when paired with modular test functions and the right orchestration framework, makes it possible to translate English to Geek and back again in real time. That shift doesn’t just simplify testing; it accelerates it, democratizes it, and changes the role of QA entirely.

Why UAT Is Broken (and Everyone Knows It)

Every product team can list the reasons UAT feels like running through mud:

- Business vs. Technical Mindset. UAT testers know the business flow but not the code. They can spot when something feels wrong but not why, leaving them dependent on QA translators.

- QA as Gatekeepers. Test cases are usually prewritten by QA, handed off to business users, and run under supervision. This dependency creates bottlenecks and friction when new scenarios emerge.

- The Time Crunch. UAT windows are notoriously short. With hundreds of scenarios and edge cases, testers are forced to cut corners. The trade-off is always speed versus coverage, and speed usually wins.

We call this the UAT Paradox: the one phase that should build confidence is often the one that erodes it.

Enter AI: From Natural Language to Executable Tests

What if testers could describe a scenario in plain English, such as “As a new customer, enroll in the system, perform a transaction, and confirm the record updates correctly,” and see it executed instantly against the live functions?

That’s not science fiction. It’s what happens when you combine:

- Large Language Models (LLMs) that understand natural language and map it to structured actions.

- Modular QA Functions: clear, reusable building blocks like loginAsNewUser(), executeTransaction(), and verifyRecordUpdate().

- A Model Context Protocol (MCP) Server to serve as the bridge, orchestrating calls between user intent and executable test cases.

Here’s the workflow:

- A product owner or business owner writes a scenario in English.

- The LLM parses the intent, decomposes it into test steps, and generates the function calls.

- The MCP server executes the calls, runs the functions, and returns the results—again in plain English.

Suddenly, non-technical users no longer need QA to hold their hands. They test on their terms, in their language, with full visibility into results. The Geek barrier is removed.
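To make the three-step workflow concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not Mesh Digital’s implementation: a keyword lookup stands in for the LLM, a plain dictionary stands in for the MCP server’s tool registry, and the three test functions are hypothetical stubs that only touch an in-memory state.

```python
from typing import Callable

# Hypothetical test functions. Real ones would drive the system under test;
# these only update an in-memory dict so the sketch runs on its own.
def login_as_new_user(state: dict) -> str:
    state["logged_in"] = True
    return "A new customer was enrolled and logged in."

def execute_transaction(state: dict) -> str:
    if not state.get("logged_in"):
        raise RuntimeError("no customer is logged in")
    state["record_updated"] = True
    return "The transaction was submitted."

def verify_record_update(state: dict) -> str:
    if not state.get("record_updated"):
        raise AssertionError("the record was not updated")
    return "The record reflects the transaction."

# The function library, keyed by the names used in this article.
LIBRARY: dict[str, Callable[[dict], str]] = {
    "loginAsNewUser": login_as_new_user,
    "executeTransaction": execute_transaction,
    "verifyRecordUpdate": verify_record_update,
}

# Assumption: a keyword lookup stands in for the LLM so the example is
# deterministic. A real planner would call a model here.
KEYWORDS = [
    ("enroll", "loginAsNewUser"),
    ("transaction", "executeTransaction"),
    ("record", "verifyRecordUpdate"),
]

def plan_from_english(scenario: str) -> list[str]:
    """Map a plain-English scenario to function names, in KEYWORDS order."""
    text = scenario.lower()
    return [name for keyword, name in KEYWORDS if keyword in text]

def run_scenario(scenario: str) -> list[str]:
    """Execute the plan and report each step in plain English."""
    state: dict = {}
    report = []
    for name in plan_from_english(scenario):
        try:
            report.append(f"PASS {name}: {LIBRARY[name](state)}")
        except Exception as exc:
            report.append(f"FAIL {name}: {exc}")
            break  # later steps depend on earlier ones
    return report

if __name__ == "__main__":
    scenario = ("As a new customer, enroll in the system, perform a "
                "transaction, and confirm the record updates correctly")
    for line in run_scenario(scenario):
        print(line)
```

The point of the sketch is the shape of the loop: English in, a list of named function calls in the middle, and a plain-English report out. Swapping the keyword lookup for a model call changes the planner, not the contract.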

A Provocative Shift: QA Becomes Architects, Not Babysitters

This approach forces a new mindset for QA. Instead of manually scripting long test cases, QA becomes the architects of a modular function library. Their job is to design crisp, reusable, human-readable functions with clear inputs, outputs, and documentation.

Think of it as building a testing API for the business. The goal isn’t to eliminate QA; it’s to elevate them.
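What might a “testing API for the business” look like in code? One possible sketch, assuming a Python library: each function is registered under a business-facing name, and its type hints and docstring double as the documentation an LLM or a tester would read. The decorator `uat_function`, the `LIBRARY` dictionary, and the stubbed `submit_transaction` body are all hypothetical names chosen for illustration.

```python
import inspect
from typing import Callable

# Registry of test functions, keyed by the name business users will see.
LIBRARY: dict[str, Callable] = {}

def uat_function(name: str):
    """Register a test function under a business-facing name."""
    def register(func: Callable) -> Callable:
        LIBRARY[name] = func
        return func
    return register

@uat_function("submitTransaction")
def submit_transaction(account_id: str, amount: float) -> bool:
    """Submit a payment from the account and return True if it was accepted."""
    # Placeholder logic; a real function would call the system under test.
    return amount > 0

def describe_library() -> list[dict]:
    """Build a catalog of names, summaries, inputs, and outputs from the code."""
    catalog = []
    for name, func in LIBRARY.items():
        signature = inspect.signature(func)
        catalog.append({
            "name": name,
            "summary": inspect.getdoc(func),
            "inputs": {
                p.name: p.annotation.__name__
                for p in signature.parameters.values()
            },
            "output": signature.return_annotation.__name__,
        })
    return catalog

if __name__ == "__main__":
    for entry in describe_library():
        print(entry)
```

Deriving the catalog from the code itself means the documentation cannot drift from the function it describes, which matters once a model is choosing functions based on that description.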

Industry Example: Reinventing UAT in a Bank

One global bank we studied faced the same challenge: UAT for its corporate banking portal had become a marathon of manual scripts, Excel trackers, and last-minute firefighting. Business users were frustrated, QA was underwater, and release windows kept slipping.

The solution wasn’t more people; it was a different model:

- Modularized Test Functions. Instead of sprawling test scripts, QA teams refactored tests into reusable functions (initiateSWIFTTransfer(), verifyFXConversionRate(), confirmRegulatoryReportSubmission()).

- Natural Language Scenarios. Business users began describing tests in plain English, which AI translated into callable functions. A corporate treasurer could type, “Initiate a USD-to-EUR payment and confirm settlement under SEPA timelines,” and run the scenario directly.

- Embedded Compliance Checks. Regulatory validations (e.g., Basel III liquidity reporting, AML transaction thresholds) were built into the function library, ensuring that compliance was tested alongside core functionality.
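One way embedded compliance checks could be wired into a function library is sketched below. This is not the bank’s actual code: the decorator `with_compliance`, the check `check_aml_flag`, the stubbed transfer function, and especially the threshold value are assumptions made for illustration, and the threshold is not a real regulatory figure.

```python
from typing import Callable, Optional

# Illustrative value only; not an actual regulatory threshold.
AML_REVIEW_THRESHOLD = 10_000.00

def check_aml_flag(transfer: dict) -> Optional[str]:
    """Return a failure message if a large transfer was not flagged for review."""
    if transfer["amount"] >= AML_REVIEW_THRESHOLD and not transfer["aml_flagged"]:
        return "transfer at or above the AML threshold was not flagged"
    return None

def with_compliance(*checks: Callable[[dict], Optional[str]]):
    """Wrap a test function so every compliance check runs on its result."""
    def decorate(func: Callable[..., dict]) -> Callable[..., dict]:
        def wrapper(*args, **kwargs) -> dict:
            transfer = func(*args, **kwargs)
            failures = [msg for msg in (check(transfer) for check in checks) if msg]
            return {"transfer": transfer, "compliance_failures": failures}
        return wrapper
    return decorate

@with_compliance(check_aml_flag)
def initiate_swift_transfer(amount: float, aml_flagged: bool) -> dict:
    """Hypothetical stand-in: returns what the portal reported for the transfer."""
    return {"amount": amount, "aml_flagged": aml_flagged}

if __name__ == "__main__":
    print(initiate_swift_transfer(25_000.00, aml_flagged=False))
```

Because the check travels with the function, a business user who runs the transfer scenario gets the compliance result without having to know that a separate validation exists.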

The impact was immediate:

- Sign-off times dropped from weeks to days.

- QA became curators of a function library rather than gatekeepers of scripts.

- Business stakeholders gained confidence because they could run the tests that mattered most to them, in their own language.

This isn’t a banking-only story. Any industry (insurance, healthcare, manufacturing, retail, and others) can apply the same principles. Banking simply demonstrates how critical the payoff can be in high-stakes, highly regulated environments.

Why This Matters Now

You could argue that test automation already exists. True. But automation is Geek-to-Geek. It assumes technical fluency, and it still requires translation from business intent to code.

AI-powered UAT is English-to-Geek-to-English. It allows business testers to express intent directly and see the outcome without an intermediary. That’s not a process tweak, it’s a paradigm shift!

And in regulated industries, where release risks are sky-high, compressing UAT timelines while improving coverage is a competitive differentiator.

Risks and Realities

Let’s not romanticize. This model isn’t plug-and-play. There are hurdles:

- Quality of Functions. If QA doesn’t build clear, reusable functions, the whole system collapses.

- LLM Accuracy. Misinterpretations happen; guardrails are essential.

- Change Management. Business users must trust the system, QA must embrace new roles, and leadership must invest in building the modular library.

But these risks are surmountable. The bigger risk is clinging to a broken UAT model and pretending it works.
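As one illustration of a guardrail for LLM misinterpretation, the sketch below validates a generated plan before anything runs. It assumes the model returns a list of steps shaped like `{"function": ..., "args": {...}}`; that shape, the `validate_plan` helper, and the stubbed library function are hypothetical. The check covers only function names and argument names, not argument types or whether the plan matches what the tester meant.

```python
import inspect
from typing import Callable

def verify_fx_conversion_rate(pair: str, expected_rate: float) -> bool:
    """Hypothetical library function used only to illustrate validation."""
    return expected_rate > 0

LIBRARY: dict[str, Callable] = {
    "verifyFXConversionRate": verify_fx_conversion_rate,
}

def validate_plan(plan: list[dict], library: dict[str, Callable]) -> list[str]:
    """Return a list of problems; an empty list means the plan passed these checks."""
    problems = []
    for number, step in enumerate(plan, start=1):
        name = step.get("function")
        func = library.get(name)
        if func is None:
            # The model named a function that QA never published.
            problems.append(f"step {number}: unknown function {name!r}")
            continue
        try:
            # bind() raises TypeError for missing or unexpected arguments.
            inspect.signature(func).bind(**step.get("args", {}))
        except TypeError as exc:
            problems.append(f"step {number}: {name} rejected its arguments ({exc})")
    return problems

if __name__ == "__main__":
    generated_plan = [
        {"function": "verifyFXConversionRate",
         "args": {"pair": "USD/EUR", "expected_rate": 0.92}},
        {"function": "deleteAllRecords", "args": {}},
        {"function": "verifyFXConversionRate", "args": {"pair": "USD/EUR"}},
    ]
    for problem in validate_plan(generated_plan, LIBRARY):
        print(problem)
```

Rejecting a plan outright and showing the tester why is usually safer than letting the model retry silently, since the rejection itself is a signal that the scenario or the library needs attention.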

The Mesh POV: Orchestrating the English ↔ Geek Flow

At Mesh Digital, we see AI-powered UAT as part of a broader movement: using AI not as a bolt-on but as a translator, orchestrator, and accelerant across the software lifecycle.

Our provocations to the market are clear:

- Stop treating UAT as a bureaucratic checkbox.

- Stop wasting human talent on manual scripts when AI can bridge the gap.

- Stop letting English vs. Geek misunderstandings derail releases.

When you do, UAT stops being a roadblock and starts being a driver of confidence, speed, and quality.

Conclusion: A New Definition of Quality

The future of UAT is not about replacing humans. It’s about empowering them. Business users should be free to test in their language. QA should be free to design intelligent guardrails rather than babysit scripts. Developers should be free to ship with confidence.

AI makes that possible by translating English to Geek and back again, bridging a gap that has slowed us down for decades.

So here’s the provocation we’ll leave you with: If your UAT process still requires a translator in the room, you’re already behind. The organizations that figure out this English ↔ Geek flow will own the future of digital delivery.